The Idea

The whole world is fascinated by ChatGPT, and of course we at PROTOS have discussed it extensively too. The more we talked about it, the more curious we became, and we realized that we wanted to find out how we could integrate ChatGPT into our daily workflow. Is ChatGPT a real cloud expert?

At our last team event, we got to work right away. The idea? We take a real customer request and give ChatGPT the tasks that we would normally complete ourselves after receiving an initial request.

We asked ourselves the following question:

How far can we use AI to solve a real customer project in AWS?

The project:

We received a fairly vague email from a customer requesting several AWS services. Specifically, the customer needed a virtual machine on AWS with certain hardware specifications and a MariaDB database. The email did not describe the existing infrastructure, and it left unclear how critical the new AWS services would be in terms of availability, security, and so on.

The workflow:

How close is the AI's response to the customer request to AWS best practices?

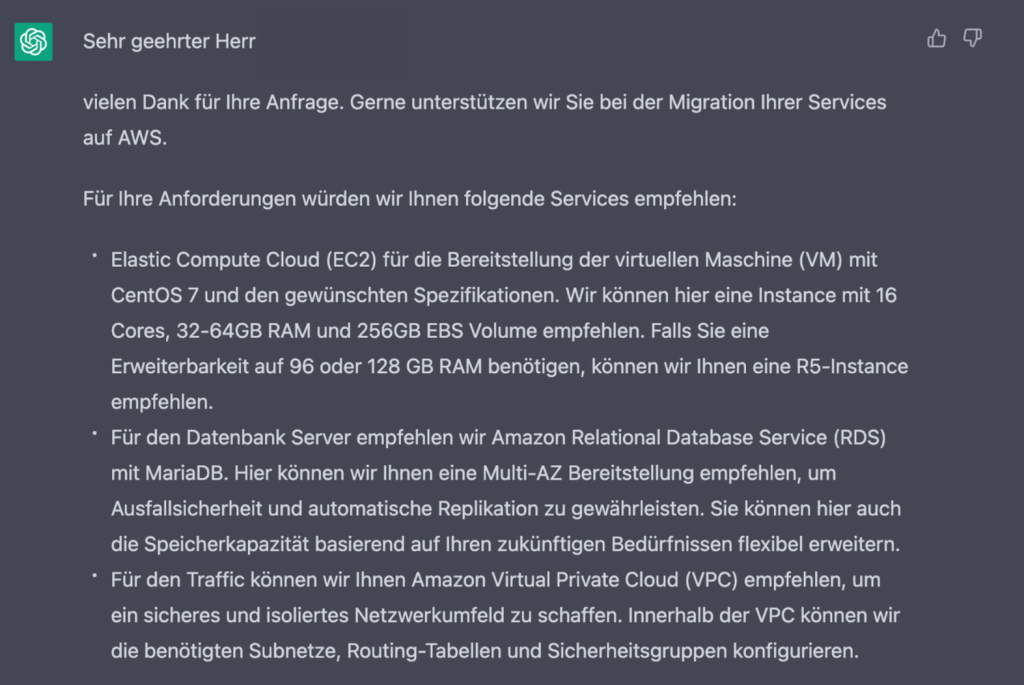

- We asked ChatGPT to draft a response to the customer’s request.

- The AI gave a reasonably meaningful answer in the form of an email, which can easily be used for further communication with the customer. It made no assumptions about an existing infrastructure. It simply answered the request and provided a specification for an EC2 instance and an RDS instance running MariaDB.

- The AI even provided the steps for an implementation of the given infrastructure, such as design, configuration, migration and testing.

- On follow-up, the AI was able to add more services: we requested automatic failover for the EC2 instance, and it suggested using an ELB together with Route 53.

- The AI copes well with an initial customer request, as long as the request is not highly specific and does not involve technologies unknown to the AI.

How well can the AI translate its original answer into code?

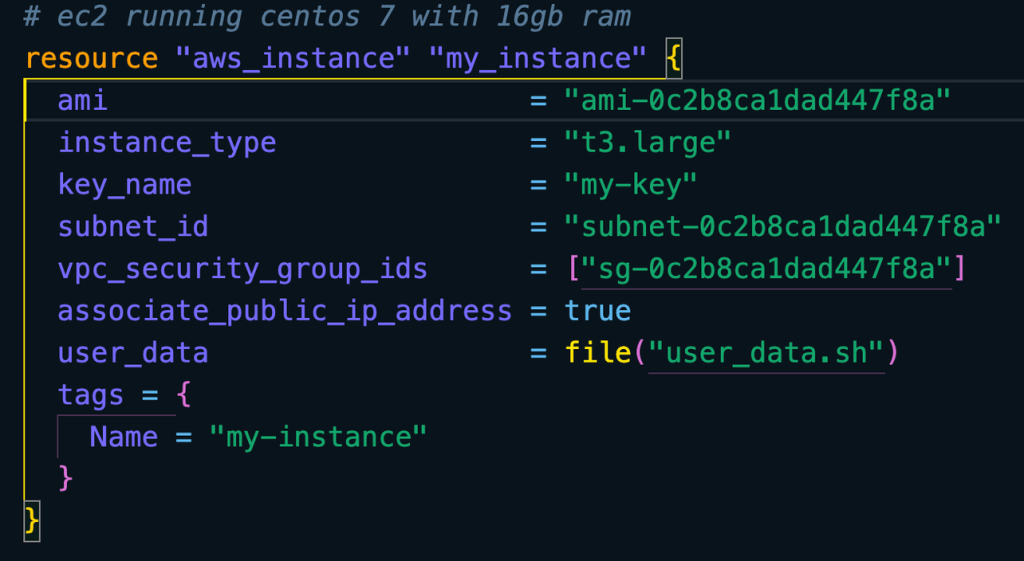

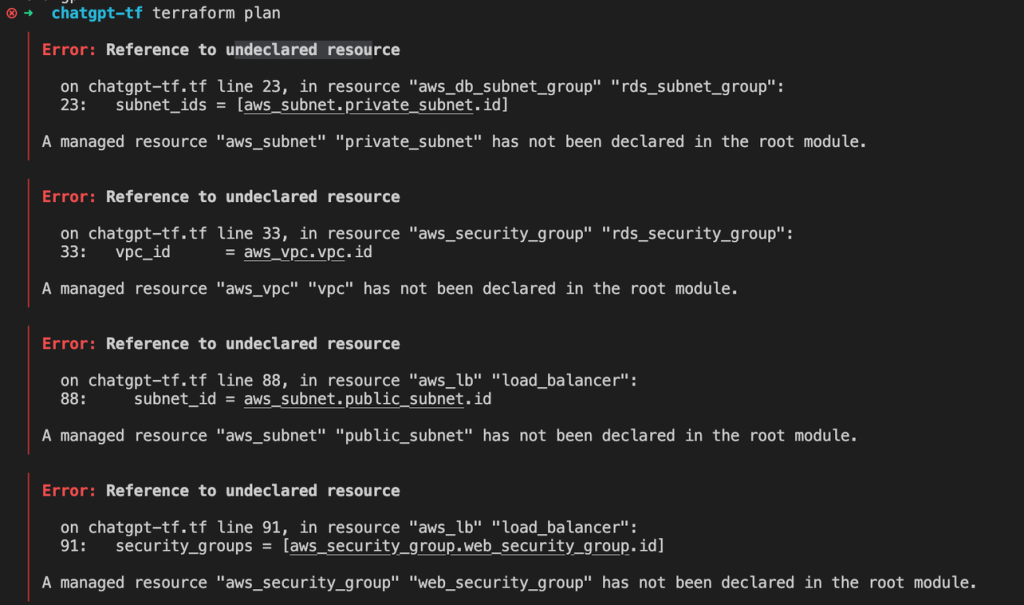

This is where it gets tricky: ChatGPT's training data ends in 2021. As a result, some of the Terraform syntax it provided was no longer valid. Even so, ChatGPT produced a valid VPC configuration with public and private subnets and the appropriate security groups. Interestingly, it assumed that the EC2 instance would serve as a web server, since the requirement was to run CentOS on it.
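For orientation, a VPC layout of the kind described above might look roughly like the following sketch. This is not the code ChatGPT generated; the resource names, CIDR ranges, default region, provider version constraint and opened ports are all assumptions chosen for illustration.

```hcl
# Illustrative sketch only: names, CIDR ranges, region and ports are assumptions.
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # pin the provider so syntax changes do not surprise you
    }
  }
}

variable "aws_region" {
  type    = string
  default = "eu-central-1" # assumed region
}

provider "aws" {
  region = var.aws_region
}

resource "aws_vpc" "main" {
  cidr_block = "10.0.0.0/16"
}

# Public subnet: instances launched here receive a public IP address.
resource "aws_subnet" "public" {
  vpc_id                  = aws_vpc.main.id
  cidr_block              = "10.0.1.0/24"
  map_public_ip_on_launch = true
}

# Private subnet: no route to the internet gateway.
resource "aws_subnet" "private" {
  vpc_id     = aws_vpc.main.id
  cidr_block = "10.0.2.0/24"
}

resource "aws_internet_gateway" "gw" {
  vpc_id = aws_vpc.main.id
}

# Route table that sends outbound traffic from the public subnet to the gateway.
resource "aws_route_table" "public" {
  vpc_id = aws_vpc.main.id

  route {
    cidr_block = "0.0.0.0/0"
    gateway_id = aws_internet_gateway.gw.id
  }
}

resource "aws_route_table_association" "public" {
  subnet_id      = aws_subnet.public.id
  route_table_id = aws_route_table.public.id
}

# Security group for a web server: HTTPS in, everything out.
resource "aws_security_group" "web" {
  name   = "web-sg"
  vpc_id = aws_vpc.main.id

  ingress {
    from_port   = 443
    to_port     = 443
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }

  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
}
```

Pinning the provider version in `required_providers` is one way to make outdated generated syntax fail early, at `terraform init` or `terraform validate`, rather than during deployment.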

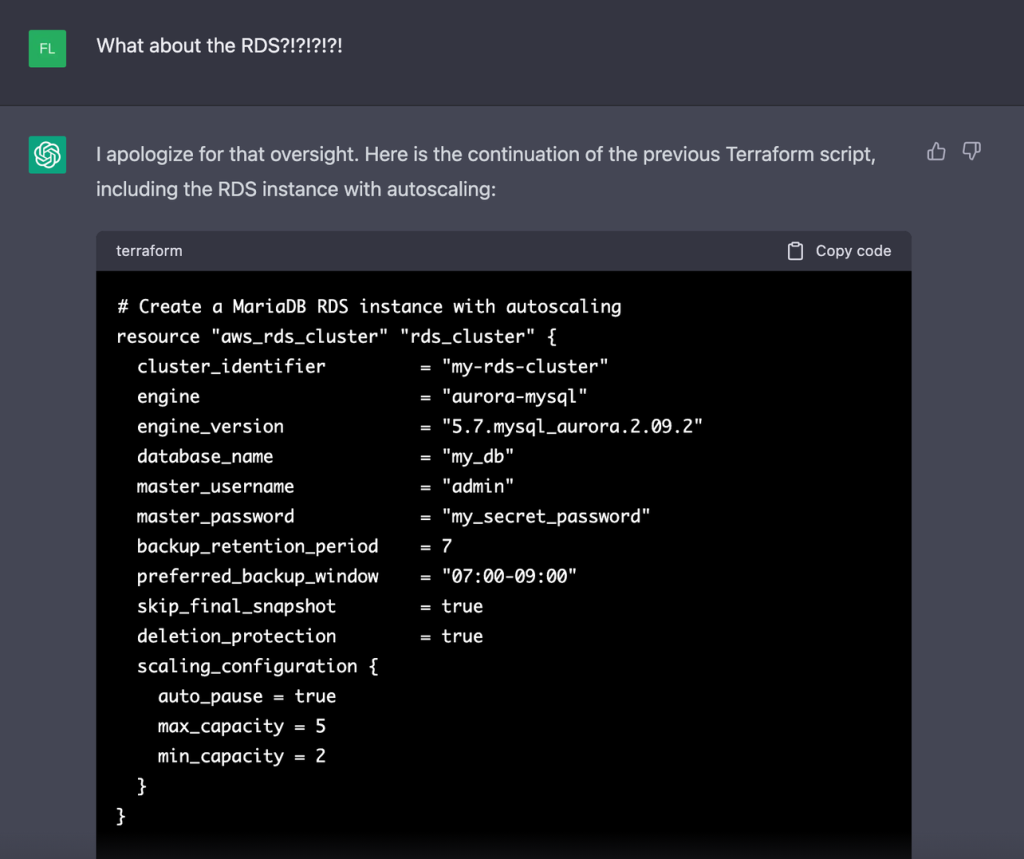

ChatGPT also seems to have forgotten the RDS instance. Generated infrastructure code therefore needs a thorough review, including a security audit, before it is used to build anything on AWS.

With the appropriate follow-up prompts (“add rds”, “use region eu-central”, etc.), ChatGPT was able to create an infrastructure that covered the original customer requirements. However, the code still contained syntax errors, because Terraform has gone through several updates since 2021.
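To give an idea of what the “add rds” step involves, here is a minimal sketch of a MariaDB instance on RDS. It is not ChatGPT's output. The variable names, identifiers, instance class and storage size are hypothetical, and `eu-central-1` is only our assumption of what “eu-central” was meant to resolve to.

```hcl
# Illustrative sketch only: all names and sizes below are assumptions.
variable "vpc_id" {
  type = string
}

# RDS requires the subnet group to cover at least two Availability Zones.
variable "private_subnet_ids" {
  type = list(string)
}

# Security group of the EC2 instance that is allowed to reach the database.
variable "app_security_group_id" {
  type = string
}

variable "db_password" {
  type      = string
  sensitive = true
}

provider "aws" {
  region = "eu-central-1" # assumed meaning of "eu-central"
}

resource "aws_db_subnet_group" "mariadb" {
  name       = "mariadb-subnets"
  subnet_ids = var.private_subnet_ids
}

# Allow MariaDB traffic (port 3306) only from the application security group.
resource "aws_security_group" "db" {
  name   = "mariadb-sg"
  vpc_id = var.vpc_id

  ingress {
    from_port       = 3306
    to_port         = 3306
    protocol        = "tcp"
    security_groups = [var.app_security_group_id]
  }
}

resource "aws_db_instance" "mariadb" {
  identifier             = "customer-mariadb"
  engine                 = "mariadb"
  instance_class         = "db.t3.medium"
  allocated_storage      = 50
  username               = "admin"
  password               = var.db_password
  db_subnet_group_name   = aws_db_subnet_group.mariadb.name
  vpc_security_group_ids = [aws_security_group.db.id]
  publicly_accessible    = false
  storage_encrypted      = true
  skip_final_snapshot    = true # convenient for a test setup, risky in production
}
```

Settings such as `publicly_accessible`, `storage_encrypted` and the ingress rule are exactly the kind of detail worth checking by hand in any generated configuration.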

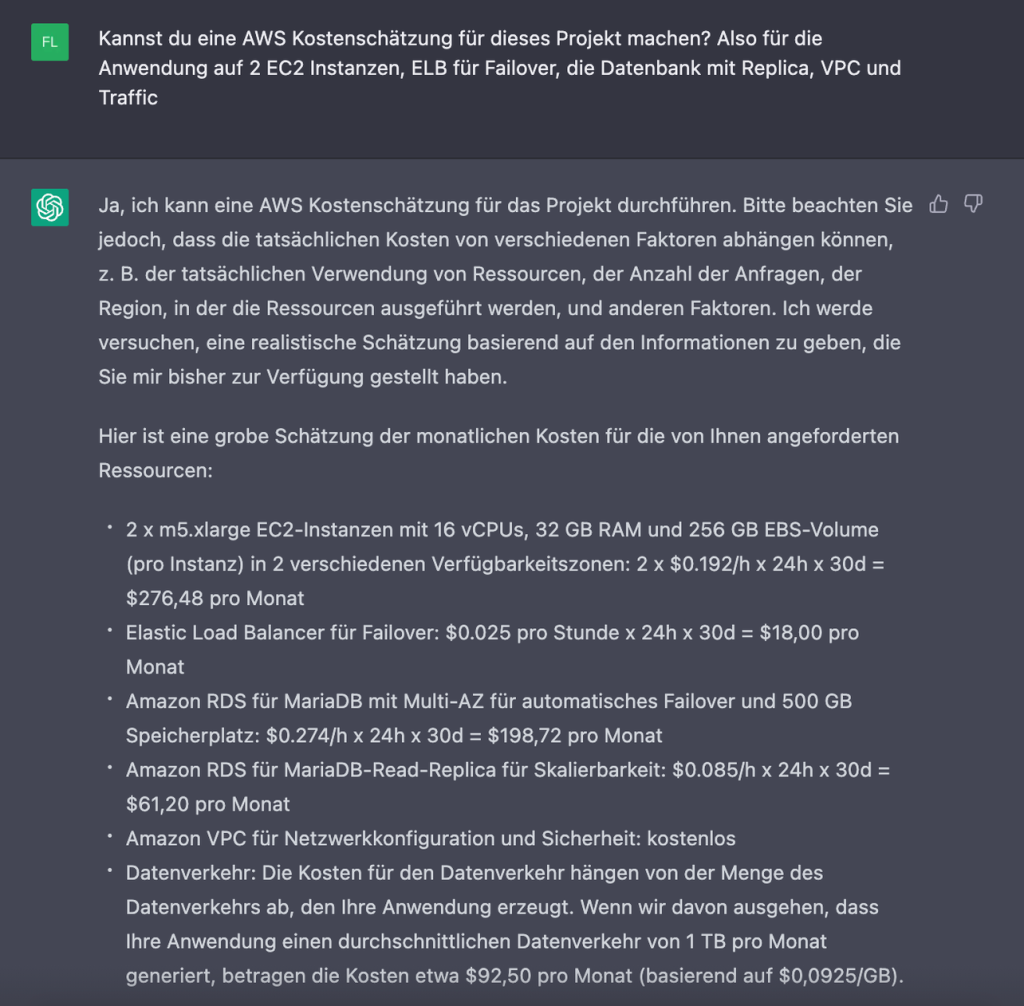

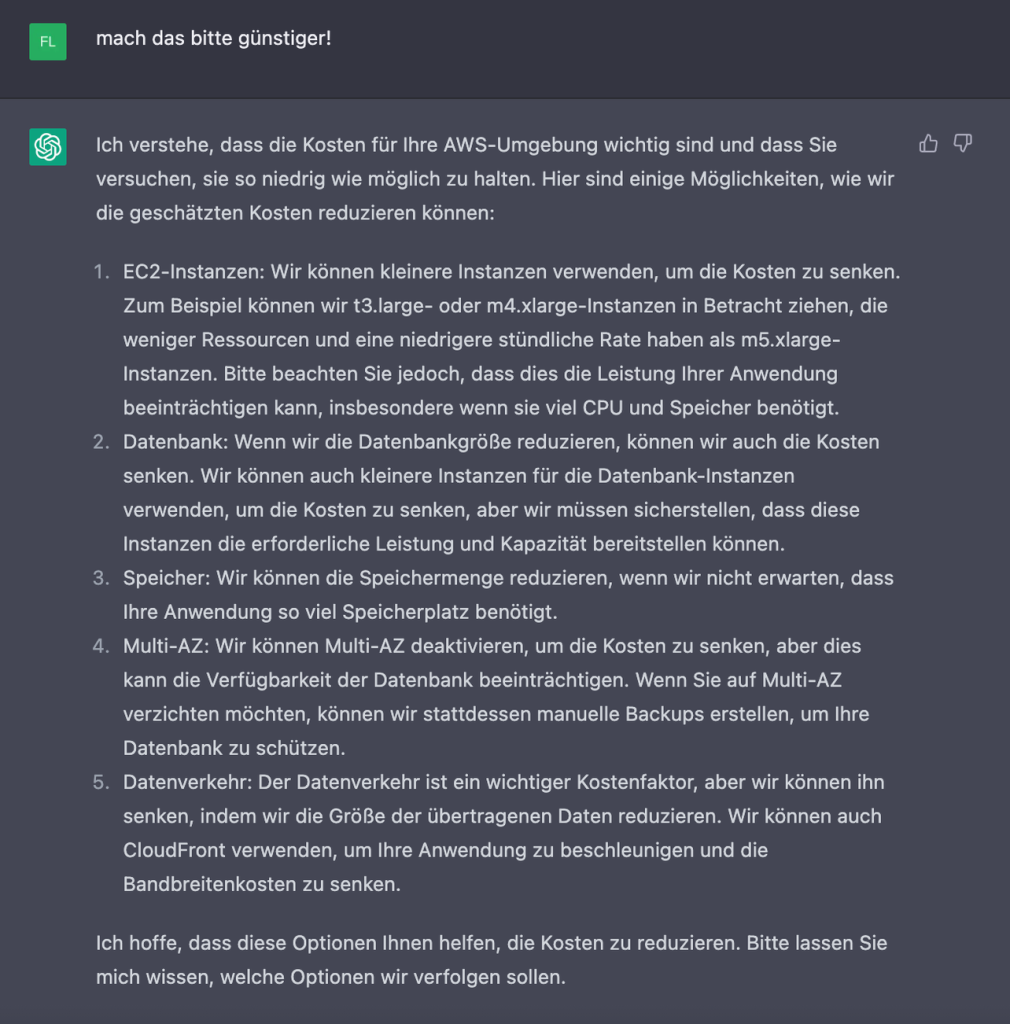

ChatGPT even produced a cost estimate, although with a deviation of 40-50% it can serve only as a very rough guide.

Conclusion

How far can we use AI to solve a real customer project in AWS?

Despite some weaknesses of ChatGPT, in less than an hour of work we were able to create a solid basis for answering the customer request. The biggest challenges were debugging the code generated by the AI and learning to recognize when the AI cannot debug itself, so that a human developer is better off relying on conventional debugging and research techniques.

For developers, this means that ChatGPT can be a useful tool for automating certain tasks related to customer inquiries in the cloud. It can help to set up basic infrastructure quickly and to generate recommendations. However, developers need to be aware that the code generated by the AI may contain bugs and does not always follow current best practices. It is therefore important to carefully inspect, adjust, and debug the generated code. Human developers remain essential to validate the AI's work, handle complex scenarios, and ensure a high-quality solution for customer projects on AWS.

Source: https://www.protos-technologie.de/2023/06/13/chat-gpt-und-aws-eine-ehrliche-einschaetzung-der-ki-unterstuetzung-fuer-kundenprojekte/