AI systems often cannot count the letter “R” in the word “strawberry.” But why do so many of them fail at such a relatively simple task?

Artificial intelligence, and especially large language models (LLMs), can perform many tasks impressively well. With tools like ChatGPT or Google Gemini, writing essays and solving complex equations is often no longer a problem.

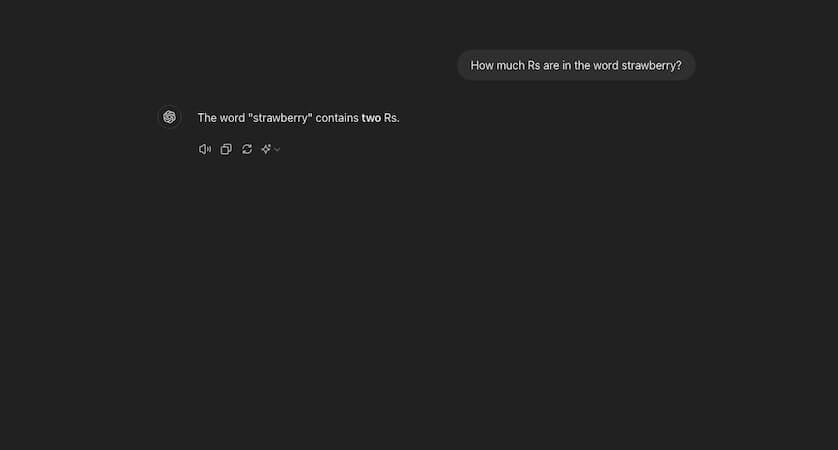

But in some cases, AI systems fail at simple things, like counting the letters in a single word. For example, it is common for artificial intelligence to give the wrong answer when asked how often the letter “R” appears in the word “strawberry.”

These errors make it clear that although AI systems are extremely powerful, they are not human. Algorithms do not “think” like we do and do not handle basic linguistic units such as letters or syllables the way people do. But why are complex mathematical formulas often no problem, while the English word “strawberry” throws so many models off track?

AI cannot count the “R” in “strawberry” because the word is split into tokens

This is mainly because LLMs are based on transformer architectures, which do not read text letter by letter. Before the model sees any input, a tool called a tokenizer breaks the text down into so-called “tokens”. Depending on the model, a token can represent a whole word, a part of a word such as a syllable, or an individual letter. The tokenizer then converts each token into a number, and the underlying AI system processes only this numerical representation.
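The splitting step can be illustrated with a minimal sketch. The vocabulary, the IDs, and the function names `tokenize` and `encode` are all made up for this example, and the greedy longest-match rule is a simplification of the learned subword schemes that real models use.

```python
# Hypothetical toy vocabulary; real tokenizers learn tens of thousands of entries.
VOCAB = {
    "straw": 0, "berry": 1,
    "s": 2, "t": 3, "r": 4, "a": 5, "w": 6, "b": 7, "e": 8, "y": 9,
}


def tokenize(text: str) -> list[str]:
    """Split text into vocabulary entries using greedy longest-match."""
    tokens = []
    i = 0
    while i < len(text):
        # Try the longest remaining piece first, then shrink it step by step.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in VOCAB:
                tokens.append(piece)
                i = j
                break
        else:
            raise ValueError(f"no vocabulary entry covers {text[i]!r}")
    return tokens


def encode(text: str) -> list[int]:
    """Convert text into the list of numbers a model would receive."""
    return [VOCAB[token] for token in tokenize(text.lower())]


print(tokenize("strawberry"))  # ['straw', 'berry']
print(encode("strawberry"))    # [0, 1]
```

In this toy setup, the ten letters of “strawberry” reach the model as just two numbers.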

For example, the AI might know that “straw” and “berry” together make “strawberry.” But it does not directly see which letters the word consists of, because each token reaches the model as a single unit. This mechanism makes it difficult for the AI to recognize individual letters or to count how often they occur in a word.
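A short sketch shows the difference between counting on characters and working with token IDs. The IDs 1001 and 1002 and the helper names are hypothetical and chosen only for illustration; they do not come from any real model.

```python
# Hypothetical token IDs, for illustration only.
TOKEN_IDS = {"straw": 1001, "berry": 1002}
ID_TO_TOKEN = {token_id: token for token, token_id in TOKEN_IDS.items()}

# What the model receives for "strawberry" in this toy setup.
word_as_ids = [TOKEN_IDS["straw"], TOKEN_IDS["berry"]]


def count_letter_in_text(text: str, letter: str) -> int:
    """Counting is trivial when the individual characters are visible."""
    return text.lower().count(letter.lower())


def count_letter_in_ids(ids: list[int], letter: str) -> int:
    """The IDs carry no letters; they must be decoded back to text first."""
    text = "".join(ID_TO_TOKEN[token_id] for token_id in ids)
    return count_letter_in_text(text, letter)


print(word_as_ids)                              # [1001, 1002]
print(count_letter_in_text("strawberry", "r"))  # 3
print(count_letter_in_ids(word_as_ids, "r"))    # 3
```

An ordinary program can look the letters up in a table, as `count_letter_in_ids` does. A language model has no such explicit lookup step and only works with the numbers.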

One of the biggest challenges here is defining what a “word” means to a language model. Even if it were possible to create a perfect token vocabulary, LLMs would likely still struggle to process more complex linguistic structures.

Different languages follow different grammatical rules

It becomes particularly difficult when an LLM has to learn several languages. Some languages, such as Chinese or Japanese, do not use spaces to separate words. This makes tokenization even more complex. One possible solution would be for language models to work directly with individual characters instead of grouping them into tokens.

But right now, this is too computationally intensive for transformer models, because the same text becomes a much longer sequence when every character is processed on its own. As technologies evolve, it remains to be seen how well future AI systems will be able to handle these challenges. Perhaps the seemingly limitless processing power of a quantum computer will one day allow artificial intelligence to absorb and understand grammar like a human.
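A rough sketch gives a sense of the cost of working with characters. It assumes standard self-attention, in which every position in the input is compared with every other position, and it reuses the split into “straw” and “berry” from the example above. The function name `attention_pairs` is made up for this illustration.

```python
def attention_pairs(sequence_length: int) -> int:
    """Number of position pairs compared, assuming standard self-attention."""
    return sequence_length ** 2


word = "strawberry"
subword_tokens = ["straw", "berry"]  # hypothetical subword split
char_tokens = list(word)             # one token per character

print(len(subword_tokens), attention_pairs(len(subword_tokens)))  # 2 4
print(len(char_tokens), attention_pairs(len(char_tokens)))        # 10 100
```

Under this assumption, a sequence that is five times longer needs 25 times as many comparisons, and the gap widens further for whole documents.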

Source: https://www.basicthinking.de/blog/2024/09/06/ki-kann-buchstaben-r-in-strawberry-nicht-zaehlen/